MCP vs gRPC: How AI Agents & LLMs Connect to Tools & Data

A look at LLM middleware options, comparing old-world and new-world methods, and when and why to select each one.

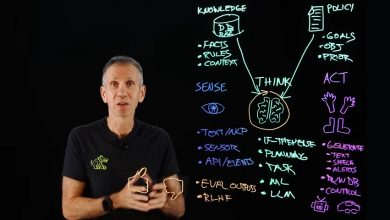

Connecting LLMs to external data sources, such as databases, APIs, knowledge bases, or real-time feeds, is crucial for tasks like RAG, tool calling, or handling dynamic workloads.

This enables LLMs to fetch real-time data, execute functions, or integrate with enterprise systems without being limited to static training data.

Interconnecting technologies, also known as ‘middleware’, have been around for a long time. An interesting question for the AI era is therefore how much a new type of solution is needed specifically for AI, versus simply using the standards and tools already developed for this function.

To explore this question, the featured video from IBM compares two protocols:

MCP (Model Context Protocol): An open-source protocol developed by Anthropic (introduced in late 2024). It’s designed specifically for secure, standardized communication between LLMs (or AI agents) and external tools and data sources. MCP acts as a middleware layer, allowing LLMs to request context (e.g., data snippets, files, or API responses) in a structured, permission-bound way. It’s particularly useful when the LLM needs to interact with a user’s environment, such as browsing files or querying databases, while enforcing safety and boundaries.
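
To make the "structured request" idea concrete, here is a minimal sketch of the message an MCP client might send to invoke a tool. MCP messages follow JSON-RPC 2.0, and `tools/call` is the method used for tool invocation. The tool name `query_database` and its `sql` argument are hypothetical, chosen only for illustration.

```python
import json
from itertools import count

# JSON-RPC requests carry an id so responses can be matched to them.
_request_ids = count(1)


def build_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool and argument names, for illustration only.
request = build_tool_call("query_database", {"sql": "SELECT 1"})
print(request)
```

The message is plain JSON, which is what makes MCP traffic easy to log and inspect during prototyping.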

gRPC (gRPC Remote Procedure Calls): A high-performance, open-source RPC framework originally developed at Google (released in 2015, with ongoing updates). It uses Protocol Buffers (Protobuf) for serialization and HTTP/2 for transport, enabling efficient client-server interactions. For LLMs, gRPC serves as a general-purpose method to expose external data sources as callable services (e.g., microservices wrapping a database), allowing the LLM to invoke remote procedures to fetch or process data.
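
To illustrate why Protobuf serialization is compact, the sketch below hand-encodes a small message in the Protobuf wire format and compares its size with the equivalent JSON. It assumes a hypothetical schema, `message Quote { string symbol = 1; int32 price_cents = 2; }`, and only handles non-negative integers. Real projects would use generated code from `protoc` rather than encoding by hand.

```python
import json


def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a Protobuf base-128 varint."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative integers")
    out = bytearray()
    while True:
        byte = value & 0x7F          # take the low 7 bits
        value >>= 7
        if value:
            out.append(byte | 0x80)  # more bytes follow: set the high bit
        else:
            out.append(byte)
            return bytes(out)


def encode_quote(symbol: str, price_cents: int) -> bytes:
    """Encode the hypothetical Quote message (fields 1 and 2)."""
    data = symbol.encode("utf-8")
    return (
        bytes([0x0A]) + encode_varint(len(data)) + data  # field 1, length-delimited
        + bytes([0x10]) + encode_varint(price_cents)     # field 2, varint
    )


binary = encode_quote("IBM", 150)
text = json.dumps({"symbol": "IBM", "price_cents": 150}).encode("utf-8")
print(len(binary), "bytes as Protobuf vs", len(text), "bytes as JSON")
```

The binary form omits field names entirely and relies on the shared schema, which is the source of both its compactness and its reduced readability.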

Both can facilitate LLM workloads, but MCP is AI-native and context-focused, while gRPC is a broader infrastructure tool adapted for AI integrations. Below is a detailed comparison across key dimensions relevant to LLMs.

Comparisons

MCP

- Primary Purpose: Standardized, secure fetching of contextual data (e.g., files, DB queries) for LLMs to augment responses or perform actions. Optimized for AI agent-tool interactions.

- Design Focus: AI-specific: Emphasizes “context” provision with safeguards such as user permissions, resource boundaries, and sandboxing, which help ground responses in real data and prevent unauthorized access.

- Protocol & Transport: JSON-RPC 2.0 messages over stdio (for local servers) or HTTP (for remote servers, with Server-Sent Events for streaming); lightweight and human-readable. Supports streaming for real-time context.

- Performance: Good for moderate data volumes; overhead from JSON and security checks. Latency ~50-200ms in typical setups; scales well for agentic workflows but not ultra-high-throughput.

- Ease of Integration for LLMs: High: Plug-and-play with supported LLMs (e.g., Claude). Frameworks like Anthropic’s SDK handle orchestration; defines “tools” natively for RAG or multi-step reasoning. Minimal boilerplate for AI devs.

- Security & Reliability: Strong AI-focused security: Built-in consent prompts, access controls, and isolation (e.g., no direct system access). Reliable for bounded tasks; fault-tolerant with retry logic in AI SDKs.

- Language & Ecosystem Support: Growing AI ecosystem: Native in Anthropic tools; SDKs for Python and TypeScript/JavaScript, among others. Limited to contexts where MCP servers are deployed (e.g., local or cloud agents).

- Scalability: Horizontal scaling via MCP hosts/servers; best for per-user sessions or small teams. Handles concurrent contexts but not designed for massive parallel RPCs.

- Use Cases in LLM Workloads: RAG with secure file/DB access (e.g., querying private docs without exposing keys), agentic tasks like code execution or browsing in controlled environments, and workloads needing “memory” extension (e.g., pulling from Notion or GitHub).

- Limitations for AI: Narrow scope: Primarily context-fetching, not general computation. Adoption still emerging (as of 2025, mainly Anthropic-aligned). Less flexible for non-AI protocols.

- Cost & Maintenance: Low: Open-source, minimal infra (run MCP servers on existing hardware). Maintenance focused on policy configs for security.
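
The "tools" mentioned in the list above are advertised by an MCP server as named entries, each with a description and a JSON Schema for its inputs. The sketch below shows roughly what a server's answer to a `tools/list` request could look like, plus a small check for missing arguments. The `search_docs` tool and the helper names are hypothetical, and the argument check is a simplification rather than full JSON Schema validation.

```python
# A hypothetical tool registry; the field names (name, description,
# inputSchema) follow the shape MCP uses for tool definitions.
TOOLS = [
    {
        "name": "search_docs",
        "description": "Search private documents and return matching snippets.",
        "inputSchema": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    }
]


def handle_tools_list(request: dict) -> dict:
    """Answer a JSON-RPC `tools/list` request with the registered tools."""
    return {"jsonrpc": "2.0", "id": request["id"], "result": {"tools": TOOLS}}


def missing_arguments(tool: dict, arguments: dict) -> list:
    """Return the required argument names absent from a call (simplified check)."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


response = handle_tools_list({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
print([tool["name"] for tool in response["result"]["tools"]])
print(missing_arguments(TOOLS[0], {}))
```

Because the description and schema travel with the tool, an LLM host can decide when and how to call it without custom glue code per tool.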

gRPC

- Primary Purpose: High-performance RPC for any distributed system, including exposing data sources as services for LLMs to call (e.g., via function calling in frameworks like LangChain or Semantic Kernel).

- Design Focus: General-purpose: Focuses on efficient, language-agnostic service calls; AI adaptations require custom wrappers (e.g., defining Protobuf schemas for data queries).

- Protocol & Transport: Binary Protocol Buffers over HTTP/2; supports streaming, multiplexing, and bidirectional communication. More compact and faster for large payloads.

- Performance: Excellent: Low latency (~10-50ms), high throughput (up to 10x faster than REST/JSON due to binary format and HTTP/2 features). Ideal for real-time data fetching in high-scale LLM apps.

- Ease of Integration for LLMs: Medium: Requires defining .proto schemas, generating stubs (in Python, Go, etc.), and integrating via LLM orchestration tools (e.g., gRPC endpoints in Vercel AI or Hugging Face). More setup for AI-specific needs like token-aware streaming.

- Security & Reliability: Robust: Supports TLS, authentication (e.g., OAuth, mTLS), and deadlines. Reliable, with built-in retries and load balancing, but security depends on the implementation: there are no innate AI safeguards like permission gating.

- Language & Ecosystem Support: Broad: Supports 10+ languages (Python, Java, Go, etc.). Mature ecosystem with Kubernetes integration; widely used in microservices for data sources like BigQuery or vector DBs.

- Scalability: Highly scalable: Built for cloud-native apps; integrates with service meshes (e.g., Istio) for millions of requests/sec. Suited for enterprise LLM deployments with heavy data traffic.

- Use Cases in LLM Workloads: High-speed API calls to external services (e.g., weather data, stock APIs for real-time augmentation), microservices architecture for vector search or database queries in production LLMs, streaming responses for long-context workloads (e.g., processing large datasets).

- Limitations for AI: Not AI-native: Requires extra layers for LLM-friendly features like natural language tool descriptions (e.g., via OpenAPI conversions). Binary format harder to debug in AI prototyping.

- Cost & Maintenance: Variable: Free core, but scaling requires cloud resources (e.g., gRPC gateways). Higher maintenance for schema evolution and versioning in dynamic AI apps.
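
The "extra layers" noted in the list above usually amount to a wrapper that turns an RPC method into a description an LLM can read. The sketch below is one possible shape for such a wrapper, not part of gRPC itself: `RpcMethod`, `to_tool_description`, and the `Weather.GetForecast` example are all hypothetical, and treating every request field as required is a simplifying assumption.

```python
from dataclasses import dataclass, field

# Mapping from a few Protobuf scalar types to JSON Schema types.
PROTO_TO_JSON_TYPE = {
    "string": "string",
    "int32": "integer",
    "bool": "boolean",
    "double": "number",
}


@dataclass
class RpcMethod:
    """Hand-written summary of one RPC method (hypothetical helper type)."""
    service: str
    name: str
    doc: str
    request_fields: dict = field(default_factory=dict)  # field name -> proto type


def to_tool_description(method: RpcMethod) -> dict:
    """Build an LLM-facing tool description from an RPC method summary."""
    properties = {
        name: {"type": PROTO_TO_JSON_TYPE[proto_type]}
        for name, proto_type in method.request_fields.items()
    }
    return {
        "name": f"{method.service}_{method.name}",
        "description": method.doc,
        "parameters": {
            "type": "object",
            "properties": properties,
            # Assumption: every request field is treated as required.
            "required": list(properties),
        },
    }


forecast = RpcMethod(
    service="Weather",
    name="GetForecast",
    doc="Return the forecast for a city.",
    request_fields={"city": "string", "days": "int32"},
)
print(to_tool_description(forecast))
```

The natural-language `doc` string is the part a `.proto` file does not enforce, so it has to be written and maintained alongside the schema.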

Key Insights for AI LLM Applications

When to Choose MCP: Opt for it in security-sensitive, agent-heavy workflows where the LLM needs safe, contextual data pulls (e.g., enterprise compliance scenarios). It’s simpler for AI developers focused on reasoning chains rather than raw performance, reducing risks like data leakage.

When to Choose gRPC: Ideal for performance-critical integrations, such as productizing LLMs with real-time external APIs or handling big data sources. It’s battle-tested in non-AI domains and shines in hybrid setups where LLMs orchestrate microservices.

Hybrid Approaches: In advanced systems, combine them: MCP for high-level orchestration and gRPC for backend data services. Benchmarks (e.g., from 2025 AI infra reports) reportedly show gRPC edging ahead on speed for payloads over 1 MB, while MCP wins on setup time (2-5x faster integration for AI tasks).
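
A hybrid setup could be sketched as an MCP-facing tool handler that delegates the actual work to a backend service. In the sketch below, `InMemoryBackend` is a stand-in for a generated gRPC client stub so the example runs without any network or third-party packages. The `vector_search` tool, the backend interface, and the substring matching are all assumptions made for illustration.

```python
from typing import Protocol


class SearchBackend(Protocol):
    """Interface a real gRPC client stub would be wrapped to satisfy (assumed)."""
    def search(self, query: str, limit: int) -> list: ...


class InMemoryBackend:
    """Stand-in for a gRPC stub; does a plain substring match."""
    def __init__(self, docs: list):
        self.docs = docs

    def search(self, query: str, limit: int) -> list:
        hits = [doc for doc in self.docs if query.lower() in doc.lower()]
        return hits[:limit]


def handle_tool_call(request: dict, backend: SearchBackend) -> dict:
    """Handle a JSON-RPC `tools/call` request by delegating to the backend."""
    params = request["params"]
    if params["name"] != "vector_search":
        return {
            "jsonrpc": "2.0",
            "id": request["id"],
            "error": {"code": -32602, "message": f"Unknown tool: {params['name']}"},
        }
    args = params["arguments"]
    hits = backend.search(args["query"], args.get("limit", 3))
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {
            "content": [{"type": "text", "text": hit} for hit in hits],
            "isError": False,
        },
    }


backend = InMemoryBackend(["gRPC uses HTTP/2", "MCP uses JSON-RPC", "Protobuf is binary"])
call = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "vector_search", "arguments": {"query": "json"}},
}
print(handle_tool_call(call, backend))
```

Keeping the backend behind a small interface means the LLM-facing layer stays unchanged if the data service is later swapped or scaled independently.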

Trade-offs: MCP prioritizes safety and simplicity for AI-specific needs, potentially at the cost of raw speed. gRPC offers versatility and efficiency but demands more engineering effort to make it LLM-friendly.

Both are evolving rapidly; check the latest docs (Anthropic for MCP, grpc.io for gRPC) for updates on AI integrations.