Building Intelligent Agents with Gemma 3

Explore the development of intelligent agents using Gemma models, with core components that facilitate agent creation, including capabilities for function calling, planning, and reasoning.

Gemma 3 is a family of lightweight open models developed by Google. Building intelligent agents with it means using its capabilities to create autonomous, proactive systems that can perform complex tasks.

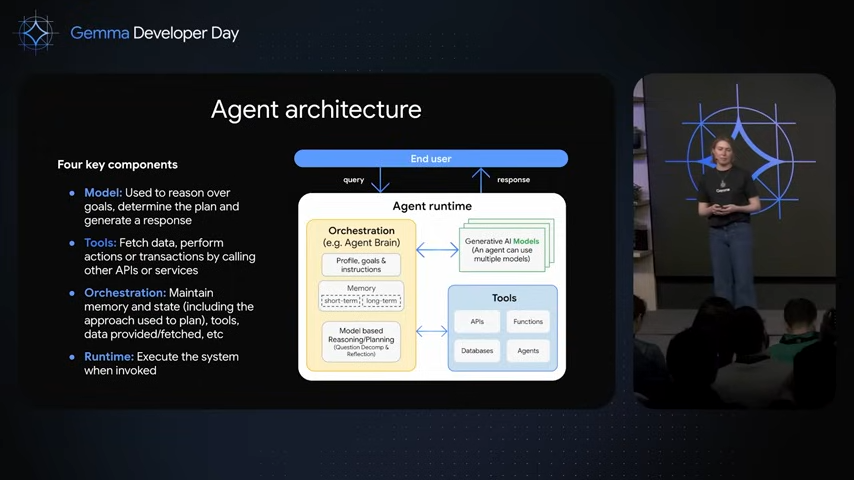

Unlike reactive chatbots that merely respond to prompts, intelligent agents are designed to act independently, reason through problems, interact with external tools, and adapt to diverse inputs such as text, images, and videos.

Gemma 3, built on the same research and technology as Google’s Gemini models, is designed to run on a single GPU or TPU. It offers multimodal understanding, function calling, and a context window of up to 128K tokens (32K for the 1B model), which makes it a practical choice for agentic AI systems.

Intelligent Agents

The process of building an intelligent agent with Gemma 3 begins with defining its purpose and goals. For instance, a YouTube Search Agent might be designed to process user queries, fetch relevant video results via the YouTube API, and provide summarized outputs.

Selecting the appropriate Gemma 3 model size is the next step, with options ranging from the text-only 1B model for low-resource devices to the multimodal 4B, 12B, or 27B models for more complex tasks involving vision and text.
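The size choice described above can be captured in a small helper. This is only a sketch: the function name `pick_model` is hypothetical, and the `gemma3:<size>` tag format is an assumption modeled on Ollama-style naming, so check the tags your runtime actually uses.

```python
# Sizes named in the text; per the text, only the 1B model is text-only.
GEMMA3_SIZES = ["1b", "4b", "12b", "27b"]
MULTIMODAL_SIZES = {"4b", "12b", "27b"}


def pick_model(needs_vision: bool, preferred_size: str = "1b") -> str:
    """Return a model tag, bumping to the smallest multimodal size if vision is needed.

    The "gemma3:<size>" tag format is an assumption for illustration.
    """
    if preferred_size not in GEMMA3_SIZES:
        raise ValueError(f"unknown size: {preferred_size}")
    size = preferred_size
    if needs_vision and size not in MULTIMODAL_SIZES:
        size = "4b"  # smallest size the text lists as multimodal
    return f"gemma3:{size}"
```

A text-only agent on a low-resource device would call `pick_model(False)`, while an agent that must read images would call `pick_model(True)` and receive a 4B or larger tag.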

Quantized versions of these models further reduce memory requirements, enabling deployment on consumer-grade hardware such as laptops. For phones and other mobile devices, the related Gemma 3n models are optimized for on-device applications.

Setting up the development environment involves installing components such as Google’s Agent Development Kit (ADK), a Model Context Protocol (MCP) client or server for tool integration, and a runtime like Ollama for local model hosting. Function calling is a critical feature: it lets the agent interact with external APIs or tools, such as invoking a YouTube search function when a user asks for video recommendations.
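To make the function-calling step concrete, here is a minimal sketch of the application side: it looks for a JSON function call in the model’s text output and dispatches it to a registered tool. The JSON shape (`name` plus `arguments`), the `search_youtube` stub, and the helper names are all assumptions for illustration; they are not an ADK or MCP API, and the real call format depends on how you prompt the model.

```python
import json
import re


def search_youtube(query: str, max_results: int = 3) -> list:
    """Hypothetical stand-in for a real YouTube API call."""
    return [f"Result {i + 1} for '{query}'" for i in range(max_results)]


# Registry of tools the agent is allowed to call.
TOOLS = {"search_youtube": search_youtube}


def extract_call(model_output: str):
    """Return (name, args) if the output holds a valid JSON tool call, else None."""
    match = re.search(r"\{.*\}", model_output, re.DOTALL)
    if not match:
        return None
    try:
        payload = json.loads(match.group(0))
    except json.JSONDecodeError:
        return None
    name = payload.get("name")
    args = payload.get("arguments", {})
    if name not in TOOLS or not isinstance(args, dict):
        return None
    return name, args


def dispatch(model_output: str):
    """Run the requested tool, or pass plain-text answers through unchanged."""
    call = extract_call(model_output)
    if call is None:
        return model_output
    name, args = call
    return TOOLS[name](**args)
```

Restricting dispatch to names in `TOOLS` keeps the model from triggering arbitrary code, which matters once the agent is exposed to untrusted input.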

The ReAct prompting style can guide the agent’s reasoning by structuring its work into repeated thought, action, and observation steps. For multimodal tasks, Gemma 3’s vision capabilities let the agent process images or short videos, for example to describe an image or extract text from it, which is particularly useful for applications that take diverse input types.
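The thought, action, observation cycle can be sketched as a short loop. This is an illustration under assumptions: the `Action: tool[input]` and `Final Answer:` text conventions are one common way to format ReAct prompts rather than a Gemma requirement, and `scripted_model` is a hypothetical stand-in for a real Gemma 3 call.

```python
import re

ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*?)\]")
FINAL_RE = re.compile(r"Final Answer:\s*(.*)", re.DOTALL)


def react_loop(model, tools, question, max_steps=5):
    """Alternate model replies and tool observations until a final answer appears."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        reply = model(transcript)
        transcript += reply + "\n"
        final = FINAL_RE.search(reply)
        if final:
            return final.group(1).strip()
        action = ACTION_RE.search(reply)
        if action is None:
            transcript += "Observation: no valid action found\n"
            continue
        name, arg = action.groups()
        tool = tools.get(name)
        observation = tool(arg) if tool else f"unknown tool {name}"
        transcript += f"Observation: {observation}\n"
    return "No answer within step limit"


def scripted_model(transcript):
    """Hypothetical stand-in for Gemma 3: acts once, then answers."""
    if "Observation:" not in transcript:
        return "Thought: I should search.\nAction: search[gemma 3 agents]"
    return "Thought: I have results.\nFinal Answer: Found 1 video."
```

The `max_steps` cap is a deliberate design choice: without it, a model that never emits a final answer would loop indefinitely.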

Implementing the agent’s workflow involves using frameworks like ADK or LangChain to manage query processing, tool interactions, and response generation.

For example, a YouTube Search Agent would receive a user query through a user interface like Gradio, process it with Gemma 3 to determine intent, call the YouTube API via MCP, and format the results into a coherent response. Fine-tuning Gemma 3 can further enhance performance for specific tasks, such as tailoring a financial agent to analyze market data or a gaming agent to generate themed content.
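The YouTube Search Agent flow described above (receive a query, determine intent, call the search tool, format a response) can be sketched end to end with stubs. Everything here is hypothetical scaffolding: `detect_intent` uses a keyword check where a real agent would ask Gemma 3, and `fake_youtube_search` returns canned data where a real agent would call the YouTube API through an MCP tool.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Video:
    title: str
    url: str


def detect_intent(query: str) -> str:
    """Keyword stand-in for a Gemma 3 intent-classification call (assumption)."""
    return "video_search" if "video" in query.lower() else "chat"


def fake_youtube_search(query: str) -> List[Video]:
    """Stand-in for a YouTube API call made through a tool (assumption)."""
    return [Video(f"Intro to {query}", "https://example.com/watch?v=1")]


def format_results(query: str, videos: List[Video]) -> str:
    """Turn raw search results into a readable response."""
    if not videos:
        return f"No videos found for '{query}'."
    lines = [f"Top results for '{query}':"]
    lines += [f"{i}. {v.title} ({v.url})" for i, v in enumerate(videos, 1)]
    return "\n".join(lines)


def run_agent(query: str, intent_fn=detect_intent, search_fn=fake_youtube_search) -> str:
    """Route the query: search when the intent calls for it, otherwise decline."""
    if intent_fn(query) != "video_search":
        return "I can only search for videos in this sketch."
    return format_results(query, search_fn(query))
```

Because `run_agent` takes a string and returns a string, it should be straightforward to put behind a text-in, text-out UI such as a Gradio interface, and the stubs can be swapped for real model and API calls without changing the control flow.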

Fine-Tuning

Fine-tuning can be done using tools like Hugging Face Transformers or Axolotl, with techniques like Reinforcement Learning from Human Feedback (RLHF) to align the model with desired behaviors.
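Whatever training tool is used, fine-tuning data has to be rendered in the turn format the model expects. The sketch below formats a conversation with Gemma’s `<start_of_turn>` and `<end_of_turn>` markers; the helper name `to_gemma_turns` is hypothetical, and in practice a tokenizer’s `apply_chat_template` method in Hugging Face Transformers is the safer route because it also handles special tokens such as the beginning-of-sequence marker.

```python
# Gemma's chat format labels the assistant side "model".
ROLE_MAP = {"user": "user", "assistant": "model"}


def to_gemma_turns(messages):
    """Render a list of {"role", "content"} dicts as Gemma-style turns."""
    parts = []
    for message in messages:
        role = ROLE_MAP.get(message["role"])
        if role is None:
            raise ValueError(f"unsupported role: {message['role']}")
        parts.append(f"<start_of_turn>{role}\n{message['content']}<end_of_turn>\n")
    return "".join(parts)
```

For a tool-using agent, the training examples would typically pair user requests with the exact function-call text you want the model to emit, so that fine-tuning reinforces the call format as well as the content.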

Deploying and testing the agent requires careful consideration of the deployment environment, whether local, cloud-based using Google’s Vertex AI, or on-device for mobile apps via Google AI Edge. Testing should include logging and observability to debug issues like incorrect function calls or model hallucinations.
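One lightweight way to get the logging mentioned above is to wrap each tool in a decorator that records its arguments, outcome, and latency. This sketch uses only Python’s standard `logging` module; the logger name `agent.tools` and the `search_youtube` stub are assumptions for illustration.

```python
import functools
import logging
import time

logger = logging.getLogger("agent.tools")


def logged_tool(fn):
    """Log every call to a tool, including failures and elapsed time."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            result = fn(*args, **kwargs)
        except Exception:
            logger.exception("tool=%s args=%r kwargs=%r failed", fn.__name__, args, kwargs)
            raise
        elapsed_ms = (time.perf_counter() - start) * 1000
        logger.info("tool=%s args=%r kwargs=%r ok in %.1f ms",
                    fn.__name__, args, kwargs, elapsed_ms)
        return result
    return wrapper


@logged_tool
def search_youtube(query):
    """Hypothetical stand-in for a real search tool."""
    return [f"stub result for {query}"]
```

With these records in place, an incorrect function call shows up as a logged tool name or argument set that does not match the user’s request, which is usually easier to spot than a wrong final answer.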

Tools like Gemma Scope can help analyze model behavior to ensure reliability. Continuous iteration, including refining prompts and adjusting tool integrations, is essential for optimizing performance. For use cases like content generation, customer support, or research assistance, Gemma 3’s multilingual support and large context window make it versatile for global and data-intensive applications.

Conclusion

Despite its strengths, building agents with Gemma 3 comes with challenges. Function calling may require careful prompt engineering to ensure reliability, and complex workflows can demand significant computational resources, though quantized models mitigate this.
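Beyond prompt engineering, one way to make function calling more reliable is to validate the model’s arguments before running the tool and to feed any error back into the next attempt. The sketch below does this with the standard library’s `inspect.signature`; the helper names and the feedback wording are hypothetical, and `model` is any callable that maps a prompt string to a reply string.

```python
import inspect
import json


def validate_call(fn, args):
    """Return None if args fit fn's signature, else an error message."""
    try:
        inspect.signature(fn).bind(**args)
    except TypeError as exc:
        return str(exc)
    return None


def call_with_retry(model, fn, prompt, max_attempts=3):
    """Ask the model for JSON arguments, re-prompting with the error on failure."""
    feedback = ""
    for _ in range(max_attempts):
        raw = model(prompt + feedback)
        try:
            args = json.loads(raw)
        except json.JSONDecodeError as exc:
            feedback = f"\nPrevious output was not valid JSON: {exc}"
            continue
        if not isinstance(args, dict):
            feedback = "\nPrevious output was not a JSON object."
            continue
        error = validate_call(fn, args)
        if error is None:
            return fn(**args)
        feedback = f"\nPrevious arguments were rejected: {error}"
    raise RuntimeError("model did not produce a valid call")
```

Each retry costs another model call, so the attempt limit trades reliability against the computational cost noted above.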

Developers must also adhere to responsible AI guidelines, using tools like ShieldGemma for content moderation if needed. Resources like the Gemma 3 Developer Guide and Gemma Cookbook provide valuable examples and tutorials for building agents, while platforms like Hugging Face and Ollama simplify model access and deployment.

By leveraging Gemma 3’s capabilities and ecosystem, developers can create powerful, efficient, and accessible intelligent agents tailored to a wide range of applications.