Reinforcement Learning for Agents

In the world of AI, reinforcement learning is how we create agents that figure out how to make smart choices in complex environments.

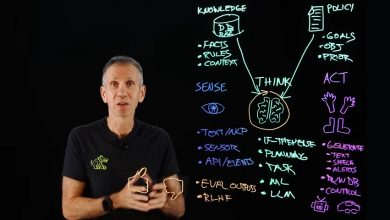

Reinforcement Learning (RL) is a machine learning paradigm in which an AI agent learns to make decisions by interacting with an environment, with the goal of maximizing its cumulative reward.

The agent observes the environment’s state, takes actions, and receives feedback in the form of rewards or penalties based on the consequences of its actions.

Over time, the agent refines its decision-making policy to optimize long-term rewards.
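To make the observe, act, and receive-feedback loop concrete, here is a minimal sketch in Python. Everything in it is an assumption chosen for illustration: the `Corridor` environment (five positions with a goal at the right end), the reward values, and the use of tabular Q-learning as the way the agent refines its policy. It is one simple instance of the idea, not the only way to do it.

```python
import random


class Corridor:
    """Hypothetical toy environment: positions 0..4, with the goal at position 4."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # action 0 = move left, action 1 = move right (walls clamp the position)
        move = 1 if action == 1 else -1
        self.state = min(max(self.state + move, 0), self.length - 1)
        done = self.state == self.length - 1
        # Reward at the goal, small penalty for every other step (assumed values).
        reward = 1.0 if done else -0.01
        return self.state, reward, done


def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    env = Corridor()
    # q[state][action] estimates the long-term (discounted) reward of an action.
    q = [[0.0, 0.0] for _ in range(env.length)]
    for _ in range(episodes):
        state = env.reset()                      # observe the initial state
        done = False
        while not done:
            if rng.random() < epsilon:           # occasionally try a random action
                action = rng.randrange(2)
            else:                                # otherwise act on current estimates
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = env.step(action)   # act, receive feedback
            # Q-learning update: nudge the estimate toward reward + discounted future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


q_table = train()
# Greedy policy for the non-terminal states: 1 means "move right".
policy = [0 if left > right else 1 for left, right in q_table[:-1]]
print(policy)
```

After training, the greedy policy moves right from every non-terminal position, which is the shortest route to the goal. The discount factor `gamma` is what makes the agent care about long-term reward rather than only the next step.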

The Power of Learning by Doing

Imagine teaching a child to ride a bike. You don’t give them a manual filled with equations or rules. Instead, they hop on, wobble, fall, and try again, learning through trial and error what keeps them balanced. Each success, even just a few pedal strokes forward, brings joy, while each tumble teaches a lesson.

Over time, they master the bike, riding with confidence. This is the essence of Reinforcement Learning (RL), a fascinating approach to building artificial intelligence (AI) that learns the way we often do: by experimenting, adapting, and improving.

In the world of AI, reinforcement learning is how we create agents—think of them as digital learners, like virtual players, robots, or smart assistants—that figure out how to make smart choices in complex environments. Whether it’s an AI mastering a video game, a robot navigating a cluttered room, or a self-driving car steering through traffic, RL empowers these agents to learn from their actions.

They observe their surroundings, try different moves, and earn “rewards” for good outcomes (like scoring a goal) or face setbacks for mistakes (like crashing into a wall). Through this cycle of doing, learning, and refining, they get better, and in some domains, such as board games and video games, they have surpassed human skill.

But RL isn’t just about cool tech. It’s about solving hard problems. Teaching an AI to learn through experience is like training a curious mind: thrilling but tricky. Agents need to balance trying bold new ideas with sticking to what works (the exploration-exploitation trade-off), all while chasing rewards that might be rare or arrive long after the action that earned them.

And in the real world, where mistakes can be costly (imagine a self-driving car learning on a busy highway), we must ensure they learn safely and ethically.
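The balance between trying new ideas and sticking to what works can be sketched with an epsilon-greedy strategy on a small "slot machine" problem. This is an illustrative assumption rather than a method named above: the three payout probabilities, the function name `run_bandit`, and the value `epsilon=0.1` are all made up for the example.

```python
import random


def run_bandit(epsilon, steps=5000, seed=0):
    """Epsilon-greedy on a hypothetical 3-armed bandit with win/lose payouts."""
    rng = random.Random(seed)
    win_prob = [0.2, 0.5, 0.8]          # assumed payout chances, hidden from the agent
    estimates = [0.0] * len(win_prob)   # the agent's running average reward per arm
    counts = [0] * len(win_prob)        # how often each arm has been pulled
    total = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: pick any arm at random.
            arm = rng.randrange(len(win_prob))
        else:
            # Exploit: pick the arm with the best estimate so far (first one on ties).
            arm = max(range(len(win_prob)), key=lambda a: estimates[a])
        reward = 1.0 if rng.random() < win_prob[arm] else 0.0
        counts[arm] += 1
        # Incremental update of the sample average for the chosen arm.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
        total += reward
    return counts, estimates, total


greedy_counts, _, greedy_total = run_bandit(epsilon=0.0)
explore_counts, explore_estimates, explore_total = run_bandit(epsilon=0.1)
print("never explores:", greedy_counts, greedy_total)
print("explores 10% of the time:", explore_counts, explore_total)
```

With `epsilon=0.0` the agent never tries anything but the first arm, so it never discovers that a better one exists. A small amount of exploration lets it find the best arm and then spend most of its pulls there. In a safety-critical setting, random exploration like this would need to be constrained, for example by learning in simulation first.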